Deep Learning Project

This project classifies species with a neural network. We preprocess the dataset, standardize the features, and one-hot encode the labels. A fully connected network is then trained to predict the species, and Keras Tuner searches over its depth and layer widths to improve on the baseline.

We begin by loading the dataset, which contains numeric features describing each sample along with its species label. The id column only identifies rows, so we drop it.

import pandas as pd

# Load the dataset

train = pd.read_csv('train.csv')

train.drop('id', axis=1, inplace=True)

Before preprocessing, we explore the dataset to identify missing values, duplicates, and the overall structure of the data.

# Check missing values and duplicates

missing_values_train = train.isnull().sum()

duplicates_train = train.duplicated().sum()

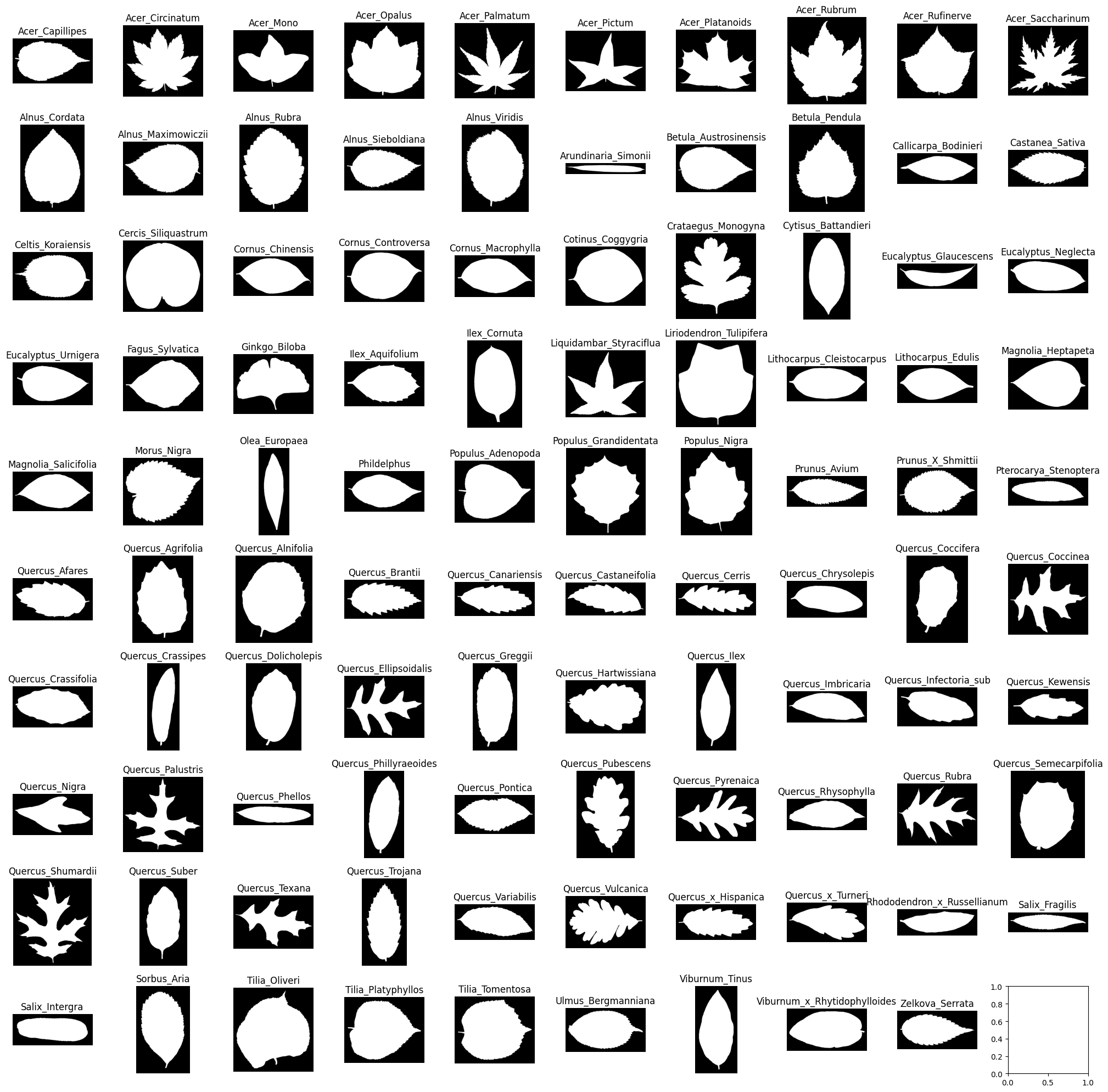
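The two checks above can be wrapped into one small report. This is a minimal sketch on a made-up frame: the column names `margin1` and `shape1` and the helper `summarize_quality` are illustrative assumptions, not taken from the project's data or code.

```python
import pandas as pd


def summarize_quality(df: pd.DataFrame) -> dict:
    """Count missing cells, list the columns that contain them, and count duplicate rows."""
    missing_per_column = df.isnull().sum()
    return {
        "missing_cells": int(missing_per_column.sum()),
        "columns_with_missing": missing_per_column[missing_per_column > 0].index.tolist(),
        "duplicate_rows": int(df.duplicated().sum()),
    }


# Toy frame standing in for train.csv (values are invented for illustration)
toy = pd.DataFrame({
    "margin1": [0.1, 0.2, None, 0.1],
    "shape1": [1.0, 2.0, 3.0, 1.0],
    "species": ["a", "b", "c", "a"],
})

report = summarize_quality(toy)
print(report)
```

On the real data, calling the same helper on `train` would show at a glance whether any imputation or de-duplication is needed before scaling.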

We display one randomly chosen sample image per class to get a feel for how the species differ visually.

import matplotlib.pyplot as plt

import os

import random

# Visualize images from each class

unique_classes = os.listdir('train_images')

fig, axes = plt.subplots(nrows=10, ncols=10, figsize=(20, 20))

axes = axes.flatten()

# The 10x10 grid shows at most 100 classes, one random image each

for ax, cls in zip(axes, unique_classes):

    class_images = os.listdir(os.path.join('train_images', cls))

    img_path = random.choice(class_images)

    img = plt.imread(f'train_images/{cls}/{img_path}')

    ax.imshow(img, cmap='gray')

    ax.set_title(cls)

    ax.axis('off')

plt.tight_layout()

plt.show()

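We preprocess the features by scaling them and encoding the target labels. StandardScaler standardizes each feature to zero mean and unit variance. LabelEncoder maps each species name to an integer, and OneHotEncoder turns those integers into one-hot vectors that match the softmax output of the network.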

from sklearn.preprocessing import LabelEncoder, StandardScaler, OneHotEncoder

X = train.drop(columns=['species'])

y = train['species']

scaler = StandardScaler()

X = scaler.fit_transform(X)

encoder = LabelEncoder()

y = encoder.fit_transform(y)

y = OneHotEncoder().fit_transform(y.reshape(-1, 1)).toarray()
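Because the labels pass through two encoders, it helps to see how a prediction is mapped back to a species name. The sketch below uses invented species names and invented probabilities; only the encoder calls mirror the code above.

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder, OneHotEncoder

# Made-up labels for illustration
species = np.array(["oak", "maple", "birch", "maple"])

label_encoder = LabelEncoder()
y_int = label_encoder.fit_transform(species)  # classes are sorted: birch=0, maple=1, oak=2
y_onehot = OneHotEncoder().fit_transform(y_int.reshape(-1, 1)).toarray()

# Pretend these rows are softmax outputs for two new samples
probs = np.array([
    [0.1, 0.2, 0.7],
    [0.2, 0.6, 0.2],
])

# argmax gives the integer class, inverse_transform recovers the name
predicted = label_encoder.inverse_transform(probs.argmax(axis=1))
print(predicted)
```

Keeping the fitted LabelEncoder around is what makes this round trip possible, so it is worth holding on to the `encoder` object after preprocessing.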

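We split the dataset into training, validation, and test sets using an 80/10/10 split: 20% of the rows are held out first, and that held-out portion is then divided evenly into test and validation sets.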

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

X_test, X_val, y_test, y_val = train_test_split(X_test, y_test, test_size=0.5, random_state=42)
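The preprocessing step earlier fits StandardScaler on every row before the split, so statistics from the validation and test rows influence the scaling. One common alternative, sketched here on synthetic data, is to split first and fit the scaler on the training rows only. The stratified split and the variable names (`X_tr`, `X_va`, `X_te`) are assumptions for this example, not part of the original code.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the real features and integer labels
rng = np.random.default_rng(0)
X_toy = rng.normal(loc=5.0, scale=2.0, size=(100, 4))
y_toy = np.repeat([0, 1], 50)

# 80% train, then split the remaining 20% evenly into validation and test
X_tr, X_hold, y_tr, y_hold = train_test_split(
    X_toy, y_toy, test_size=0.2, stratify=y_toy, random_state=42
)
X_va, X_te, y_va, y_te = train_test_split(
    X_hold, y_hold, test_size=0.5, stratify=y_hold, random_state=42
)

# Fit on the training rows only, then reuse the same statistics everywhere
scaler = StandardScaler().fit(X_tr)
X_tr_s = scaler.transform(X_tr)
X_va_s = scaler.transform(X_va)
X_te_s = scaler.transform(X_te)

print(len(X_tr), len(X_va), len(X_te))
```

Stratifying keeps the class proportions similar across the three sets, which matters when some species have only a handful of samples.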

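We create a fully connected neural network using Keras with two hidden ReLU layers (64 and 32 units) and a softmax output with one unit per species. Early stopping halts training once the validation loss stops improving and restores the best weights, which helps prevent overfitting.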

from keras.models import Sequential

from keras.layers import Dense

import keras

# Define the model

model = Sequential()

model.add(Dense(64, activation='relu', input_shape=(X.shape[1],)))

model.add(Dense(32, activation='relu'))

model.add(Dense(y.shape[1], activation='softmax'))

# Compile and train the model

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

stop_early = keras.callbacks.EarlyStopping(monitor='val_loss', patience=2, restore_best_weights=True)

model.fit(X_train, y_train, validation_data=(X_val, y_val), epochs=10, batch_size=32, callbacks=[stop_early])
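To make the `patience=2` setting concrete, here is a simplified plain-Python sketch of the patience rule. It is not Keras's implementation, and the function name and the loss values are invented for illustration.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return (stop_epoch, best_epoch), both 0-indexed, for a sequence of validation losses."""
    best_loss = float("inf")
    best_epoch = -1
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            # Improvement: remember this epoch and reset the counter
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            # No improvement: stop once patience is used up
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    # Training ran through every epoch without triggering the rule
    return len(val_losses) - 1, best_epoch


# Invented validation losses: improvement stalls after the third epoch
losses = [0.90, 0.70, 0.65, 0.66, 0.68, 0.60]
stop, best = early_stopping_epoch(losses, patience=2)
print(stop, best)
```

In this toy run, training stops at epoch index 4 and the weights from epoch index 2 would be the ones restored, so the later improvement at index 5 is never reached. That is the trade-off of a small patience value.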

We evaluate the model’s accuracy on the training, validation, and test sets.

train_acc = model.evaluate(X_train, y_train)[1]

val_acc = model.evaluate(X_val, y_val)[1]

test_acc = model.evaluate(X_test, y_test)[1]

print("Training Accuracy:", train_acc)

print("Validation Accuracy:", val_acc)

print("Test Accuracy:", test_acc)
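The accuracy reported for one-hot labels is the share of samples whose highest-probability class matches the true class. A minimal NumPy sketch of that calculation, using invented labels and probabilities and a hypothetical helper name:

```python
import numpy as np


def categorical_accuracy(y_true_onehot, y_prob):
    """Fraction of rows where the predicted class (argmax) equals the true class."""
    true_classes = np.argmax(y_true_onehot, axis=1)
    predicted_classes = np.argmax(y_prob, axis=1)
    return float(np.mean(true_classes == predicted_classes))


# Invented one-hot labels and softmax-style outputs for four samples
y_true = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 0]])
y_prob = np.array([
    [0.8, 0.1, 0.1],
    [0.2, 0.5, 0.3],
    [0.3, 0.4, 0.3],
    [0.1, 0.7, 0.2],
])

acc = categorical_accuracy(y_true, y_prob)
print(acc)
```

Comparing this number across the training, validation, and test sets is what reveals overfitting: a training accuracy far above the other two suggests the network has memorized rather than generalized.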

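We optimize the model’s architecture using Keras Tuner’s Hyperband method. The search varies the number of additional hidden layers (2 to 20) and the number of units in each layer (32 to 512 in steps of 32).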

import keras_tuner as kt

def model_builder(hp):

    model = Sequential()

    # Input layer: tunable width

    model.add(Dense(units=hp.Int('units_input', min_value=32, max_value=512, step=32), activation='relu', input_shape=(X.shape[1],)))

    # Tunable number of hidden layers, each with its own tunable width

    for i in range(hp.Int('num_layers', 2, 20)):

        model.add(Dense(units=hp.Int('units_' + str(i), min_value=32, max_value=512, step=32), activation='relu'))

    model.add(Dense(y.shape[1], activation='softmax'))

    model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

    return model

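# Rank trials by validation accuracy so the search favors models that generalize

tuner = kt.Hyperband(model_builder, objective='val_accuracy', max_epochs=10, factor=3, directory='model_tuning', project_name='fully_connected')

# Hyperband assigns the epoch budget for each trial itself (up to max_epochs), so no epochs argument is passed

tuner.search(X_train, y_train, validation_data=(X_val, y_val), callbacks=[stop_early])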

best_model = tuner.get_best_models(num_models=1)[0]
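Hyperband builds on successive halving: train many configurations briefly, keep the best fraction, and give the survivors a larger epoch budget. The sketch below shows a single round of that idea in plain Python. It is a simplified illustration, not Keras Tuner's code, and `toy_score` is a made-up stand-in for "train this configuration for this many epochs and return its validation accuracy".

```python
def successive_halving(configs, score_fn, min_epochs=1, factor=3):
    """Keep the top 1/factor of configs each round while multiplying the epoch budget by factor."""
    survivors = list(configs)
    epochs = min_epochs
    history = []  # (number of configs evaluated, epochs each) per round
    while len(survivors) > 1:
        history.append((len(survivors), epochs))
        ranked = sorted(survivors, key=lambda c: score_fn(c, epochs), reverse=True)
        survivors = ranked[:max(1, len(ranked) // factor)]
        epochs *= factor
    return survivors[0], history


def toy_score(config, epochs):
    """Hypothetical score: peaks at 128 units and grows slightly with more epochs."""
    return -abs(config["units"] - 128) + 0.01 * epochs


# Nine candidate widths: 32, 64, ..., 288
candidates = [{"units": u} for u in range(32, 289, 32)]
best, history = successive_halving(candidates, toy_score, min_epochs=1, factor=3)
print(best, history)
```

With `factor=3`, nine candidates are cut to three after one epoch each, and the three survivors are then compared at three epochs each. Hyperband runs several such brackets with different starting budgets, which is why most trials in the search finish after only a few epochs.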

We evaluate the best-tuned model on the training, validation, and test sets to confirm its performance.

train_acc = best_model.evaluate(X_train, y_train)[1]

val_acc = best_model.evaluate(X_val, y_val)[1]

test_acc = best_model.evaluate(X_test, y_test)[1]

print("Training Accuracy:", train_acc)

print("Validation Accuracy:", val_acc)

print("Test Accuracy:", test_acc)

This project walks through species classification with a fully connected neural network: exploring and visualizing the dataset, standardizing the features, encoding the labels, training a baseline model, and tuning it. Keras Tuner’s Hyperband search automates the choice of network depth and layer widths, and the held-out test set gives a final check on how well the tuned model generalizes.

For the complete code and further details, you can visit the GitHub repository.
